Microsoft CEO Satya Nadella has, finally, addressed the recent surge of sexually explicit AI-generated deepfake images of Taylor Swift that circulated widely on social media, and the resulting fallout. Nadella emphasized the urgency of addressing AI misuse and commented on the importance of a safe online world for both content creators and consumers.

Artificial intelligence (AI) technology has been in the public and government crosshairs recently, with good reason. High-profile lawsuits have been filed questioning the ethics and legality of using private and proprietary data to train AI without consent or compensation.

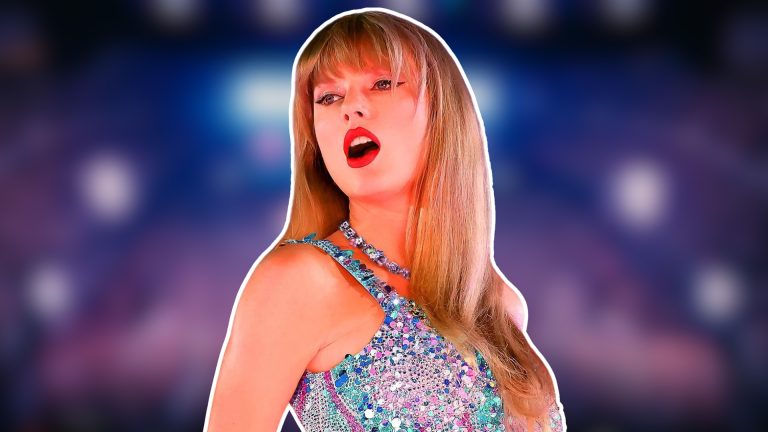

However, the exploitation of pop superstar Taylor Swift’s likeness for AI-generated pornographic imagery may be the straw that breaks the camel’s back, and that camel may be named Microsoft.

Tonight on Nightly News, Lester asks the CEO of Microsoft what the industry can do to stop sexually explicit deepfakes like the one of Taylor Swift that was circulating online.

— NBC Nightly News with Lester Holt (@NBCNightlyNews) January 26, 2024

In an interview with NBC Nightly News anchor Lester Holt, the Microsoft CEO expressed his concern, labeling the proliferation of nonconsensual simulated nudes as “alarming and terrible.” Nadella also underscored the need for immediate and decisive action within the tech industry to ensure a safer online environment for content creators and consumers alike.

Despite Microsoft’s efforts to curb the spread of explicit deepfakes, the images were viewed over 45 million times on X before their removal, prompting public outrage. The Elon Musk-owned social media platform imposed a temporary block on searches related to Taylor Swift.

The controversy surrounding the music artist’s NSFW AI images prompted NBC’s Holt to question Nadella about the situation, referring to the internet as “exploding with fake, sexually explicit images of Taylor Swift.” Nadella acknowledged the severity of the issue, stating, “I think it behooves us to move fast on this.”

You might ask: why is Microsoft singled out for the Taylor Swift AI incident? According to certain reports, it was specifically Microsoft Designer, a text-to-image program powered by OpenAI’s DALL-E 3, that was used to create the explicit deepfake images of Taylor Swift.

Original article here: https://t.co/XP5QivOCzL

— Joseph Cox (@josephfcox) January 29, 2024

The images, which portrayed Swift nude and in sexually explicit acts while surrounded by Kansas City Chiefs players, quickly spread across social media platforms like X (formerly Twitter) and Reddit. The incident raised concerns about potential legal repercussions, leading Microsoft to take action.

To address the misuse of its AI technology, Microsoft implemented an update to Designer, introducing “guardrails” to prevent the creation of nonconsensual images. The company stated its commitment to investigating the issue and taking appropriate action, including potential consequences for users who violate its Code of Conduct by creating deepfakes.

The controversy extends beyond Microsoft, with social media platforms like X facing criticism for allowing the dissemination of synthetic and manipulated media in violation of their own policies. The White House weighed in on the deepfakes trend, describing it as “very alarming,” and expressed a commitment to addressing the issue.

The incident involving Taylor Swift is not the first time Microsoft has faced challenges related to AI. In 2016, the company encountered trademark issues with the pop singer concerning the name ‘Tay’. Microsoft had planned to bring its Chinese chatbot, XiaoIce, to the US under this name.

However, Swift claimed ownership of the name ‘Tay’ and threatened legal action against Microsoft, citing a false association between her and the chatbot. As it turned out, Swift’s concerns proved justified when the Tay chatbot later produced misogynistic and racist output.

The recent deepfake controversy underscores the broader challenges faced by tech companies in managing the ethical implications of AI technology. The incident with Taylor Swift highlights the urgent need for industry leaders, including Microsoft, to implement robust safeguards and regulations to prevent the misuse of AI-generated content.

As the debate on AI deepfakes continues, Microsoft and the makers of other AI image generators such as Midjourney and Stable Diffusion may find themselves subject to increased legal, legislative, and regulatory scrutiny.

The incident serves as a call to action for the industry to prioritize safety, responsible AI principles, and the development of effective content filtering, operational monitoring, and abuse detection mechanisms.

The entire situation also sheds light on how lackluster the regulations and protections against AI exploitation have been thus far. If a celebrity with as much recognition and influence as Taylor Swift can fall victim to this kind of AI exploitation, where does that leave ordinary people?

The sexually explicit AI-generated deepfakes of Taylor Swift have ignited a broader discussion on the ethical use of AI technology. Microsoft’s response reflects recognition of the challenges posed by the misuse of AI and the need for governments to implement proactive measures to create a safer online environment for users.