Tech companies should compensate news publishers for training AI models on their copyrighted content. That isn't just our opinion: it was the near-unanimous view of the top media experts who testified before a subcommittee of the US Senate Committee on the Judiciary this week.

Over the years, journalism has adapted to new technologies. The internet, for example, prompted a shift from print to online platforms, and publishers adjusted their strategies to rank well in search engines and attract readers and digital advertisers. Now the industry faces another shift.

However, the advent of large language models such as OpenAI's ChatGPT, which can generate text automatically but are trained on unethically sourced datasets that were never intended for commercial use, raises new challenges for the industry. The issue drew wide public interest after The New York Times filed its billion-dollar lawsuit against OpenAI.

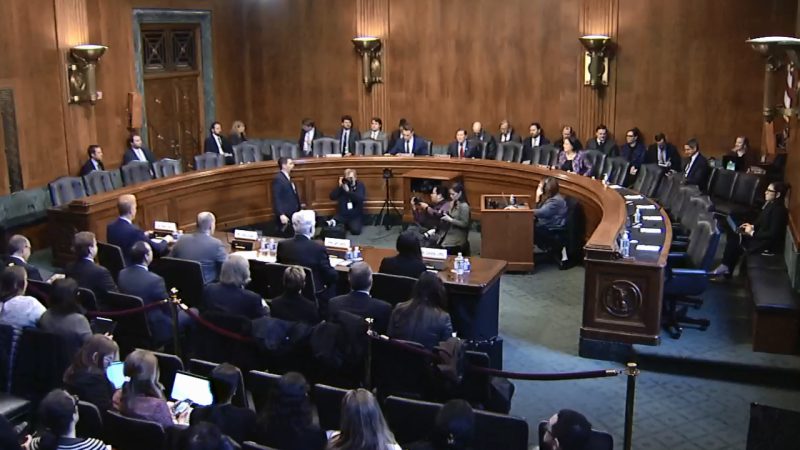

The subcommittee hearing, presided over by Senator Richard Blumenthal, took place on January 10, 2024. Lawmakers sought insights from media executives, trade association leaders, and academics on the impact of generative AI on the journalism industry.

LIVE: Can local newspapers & broadcast stations—the lifeblood of our communities—survive in the era of AI? My Subcommittee is diving into this question & its implications for the future of journalism & oversight of AI. https://t.co/HbIPx6Tqxp

— Richard Blumenthal (@SenBlumenthal) January 10, 2024

Generative AI models, trained on extensive internet data, can create many types of content. The New York Times' lawsuit accuses OpenAI of unlawfully scraping millions of its copyrighted articles and of profiting from output that reproduces passages of those articles verbatim, allowing users to bypass the paper's paywall.

In response, publishers are advocating for compensation and negotiating licensing agreements to regain control from tech companies. It remains legally uncertain whether, and how, generative AI violates existing copyright law. AI developers argue that their use of internet-scraped content falls under fair use, asserting that the generated text transforms the original material into something new.

During the Senate hearing, Roger Lynch, CEO of magazine publisher Condé Nast, contested the fair use argument, insisting that fair use should not simply enrich tech companies that resist paying for, or properly crediting, the content they use.

Danielle Coffey, CEO of the News/Media Alliance trade association, raised a further concern: chatbots designed to crawl the web and summarize articles, such as Microsoft Bing and Perplexity, could hurt publishers even more by reducing website traffic and ad revenue.

To sustain the journalism industry, experts recommend licensing agreements that let media outlets profit from generative AI without creating obstacles for smaller developers building their own large language models.

While most of the executives and experts who testified favored regulation and mandatory licensing for AI companies, Jeff Jarvis, a retired professor from the City University of New York's Craig Newmark Graduate School of Journalism, cautioned against it. He argued that such rules could set precedents affecting journalists and the small open-source companies competing with major tech corporations.

Journalism is only one of many areas where datasets are exploited to train AI. Just recently, AI image generators like Midjourney and Stable Diffusion have drawn criticism for apparent copyright infringement and for child sexual abuse material (CSAM) found in the datasets they draw from.

Meanwhile, OpenAI CEO Sam Altman recently met with Republican Speaker of the U.S. House of Representatives Mike Johnson to discuss the risks and promises of AI. As reported by Reuters, the meeting addressed concerns about AI's potential to disrupt democratic processes, increase fraud, and cause job losses.

Although the Biden administration has pushed for AI regulation, progress in the polarized U.S. Congress has been limited. The European Union, by contrast, has taken the lead in regulating AI, with lawmakers drafting rules to address a range of concerns about the technology.

OpenAI, initially founded as an open-source nonprofit, shifted to a capped-profit structure in 2019. In November, in a sign of the turmoil within the company, the board of directors fired Sam Altman as CEO and then reinstated him within days; Microsoft subsequently took a non-voting observer seat on the company's board.

After meeting with Johnson, Altman expressed anticipation about the legislative process and the ongoing effort to strike the right balance between fostering innovation and addressing potential risks. He has also made sweeping claims that AGI (artificial general intelligence) will arrive "relatively soon" and that his company's GPT-5 will be the next step toward it.

However, AI experts such as Chomba Bupe dismiss Altman's statements, calling him and other AI industry figures "carrot danglers." In this view, AI companies rely on hyperbole and FOMO (fear of missing out) as a form of false advertising, while sidestepping the ethical and legal questions surrounding how data is used to train their models.

Haven't you noticed by now?

These rich business folks are "carrot danglers".

We are always 2-5 yrs away from AGI according to them, just ask Elon Musk.

They prey on VCs with FOMO & it's a lucrative business to dangle the carrot like this. https://t.co/v8LSaQBNoM

— Chomba Bupe (@ChombaBupe) January 12, 2024

As the public becomes increasingly aware of AI's problems and controversies, the discussions in Congress underscore the importance of responsible AI development and regulation that ensures fair compensation as the technology is integrated into human-centric disciplines and industries, including art and journalism. Is there a true common ground that can make this happen?